Why I fired ChatGPT as my research assistant

3 lessons learned from using AI as a research tool

While writing my recent article on mindset and self-tracking, I turned to ChatGPT to help me find academic journal articles on the effectiveness of self-tracking for behavior change.

At first glance, I was super impressed. Within 10 seconds, it generated a list of academic journal articles I could cite to show the power of self-tracking.

Did I just save hours of researching articles?

Before citing the articles, I wanted to know whether it had pulled studies with positive results... or had simply pulled paper titles that matched my query. I wanted a glimpse of each study's key results, so I asked ChatGPT to summarize the articles.

My research assistant let me down. So I tried a simpler request: pull the abstract from each study.

Something seemed off. To figure out where ChatGPT was getting stuck, I decided to look up the first article myself, and I quickly saw why it couldn't pull the abstracts. There was a little problem: the article didn't seem to exist.

So I looked for the second... Strike 2!

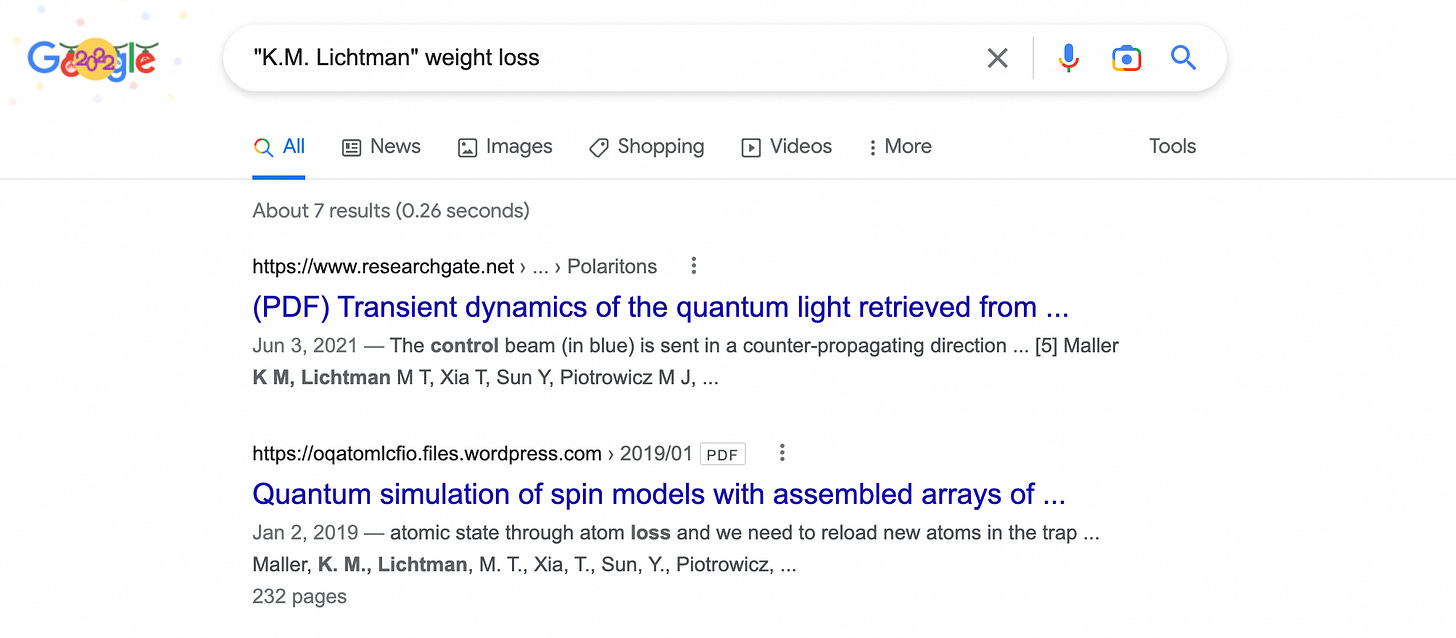

There is an article with a similar title, but its lead author doesn't match the citation. Where is K.M. Lichtman?

I found Lichtman, but K.M. seems to be in a completely different field of research.

Despite how legitimate the journal citations looked, ChatGPT had completely fabricated them.

Here are my 3 key takeaways from this week's use of ChatGPT:

ChatGPT can be a very convincing liar. It didn't hesitate to respond to my request for academic sources. It even formatted the citations in proper APA style, listed multiple authors, and named the journal. However, when I fact-checked the sources, the articles simply didn't exist. The authors may have existed, but not in the field of study I was researching.

ChatGPT is candid about some of its limitations. I found it very interesting that it disclosed its own limits with the response that it couldn't perform "this task as it goes against my capabilities as a language AI model." I'm curious to discover which other requests trigger this response.

ChatGPT requires the operator to understand its limitations and how to write prompts that produce useful outputs. To give my AI friend a shot at redemption, I gave it one last chance. Summarizing a research article seemed like a reasonable task, so maybe I just needed to ask in a different way. I took the abstract of a research article I had co-authored and pasted it into ChatGPT. When I asked, "Can you summarize this to a 12 year old?", it did a really nice job.

I asked again, but this time for a more mature audience.

It did a great job adjusting the description for the intended audience of PhD researchers.

Even with that redemption, ChatGPT remains fired as my Research Assistant...but I'm keeping it around as my Writer's Assistant for now.
